android media framework is built on top of a set of media libraries, including

OpenCORE,

vorbis and sonivox. So one of goal of android media framework is to provide a consistent interface for all services provided by underlying libraries and make them transparent to users.

The figure below shows the dependency relationships between libraries of the media framework.

In this figure, green components are media libraries, yellow components are android internal libraries, grey components are external libraries, and the light blue class is the java consumer of the media framework. Except for android.media.MediaPlayer class, all components are implemented in c or c++.

The core of the media framework is composed of libmedia, libmediaplayerservice and libmedia_jni. Their codes reside in frameworks/base/media folder.

libmedia defines the inheritance hierarchy and base interfaces. It’s the base library.

libmedia_jni is the shim between java application and native library. First, it implements the JNI specification so that it can be used by java application. Second, it implements the facade pattern for the convenience of caller.

libmediaplayerservice implements some of concrete players and the media service which will manage player instances.

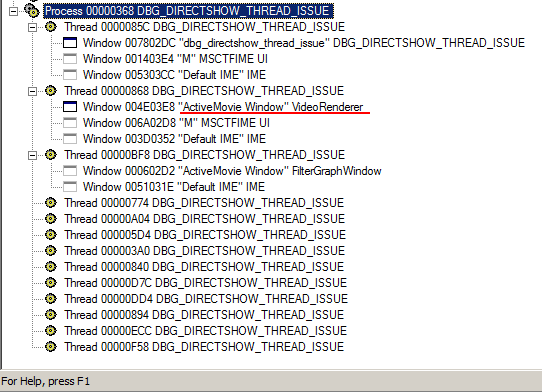

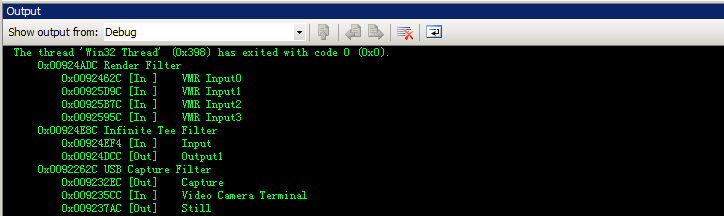

The figure below shows the class hierarchy.

This is a simplified version of the class hierarchy. Only some core classes are included. Classes in blue are defined in libmedia, classes in green are defined in libmediaplayerservice, classes in light yellow are defined in binder, which implements the IPC on android. And classes in grey are defined in other libs.

Note the BpInterface and BnInterface are template classes. Any instantiation of them also inherit the template argument INTERFACE as well.

In the class hierarchy diagram, though listed as a separate module, binder is actually implemented inside libutils component whose source code locate at /frameworks/base/libs/utils folder.

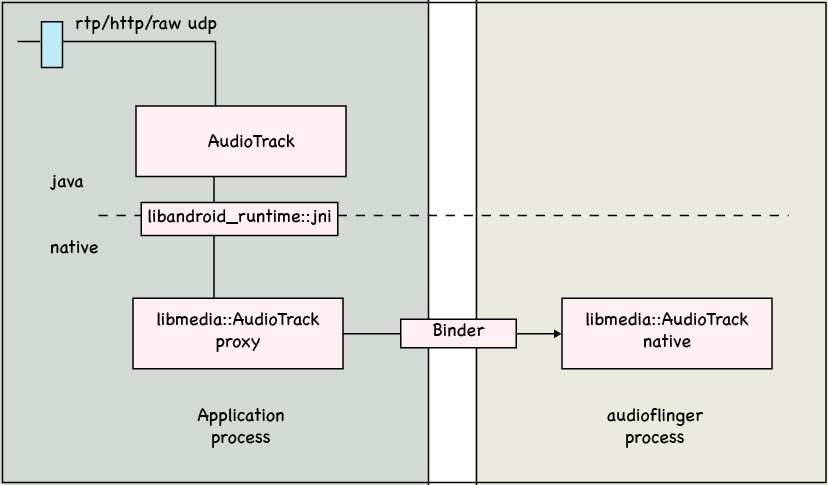

An interesting thing to note is in android, the application that intends to show the media content and the player that actually renders the media content run in different process. The red line in the sequence diagram below shows the boundary of two processes.

The figure shows three most common operations, creating a new player, setting datasource and playing. The last MediaPlayerBase object is the interface that MediaPlayerService::Client object uses to refer to the concrete player instance. The concrete player can be VorbisPlayer, PVPlayer, or any other player, depending on the type of the media to be played.

When an application creates a android.media.MediaPlayer object, it’s actually holding a proxy which can be used to manipulate the concrete player resides in the mediaserver process. During the whole procedure, two process communicates with Binder IPC mechanism.

Having knowledge above, it’s not difficult to understand why MediaPlayer doesn’t provide an API to use memory stream as source. Because the memory manipulated by the stream is in the address space of the application, and it’s not directly accessible by the mediaserver process.

References:

Google I/O, Mastering the Android Media Framework

android media framework uml diagram